Geospatial and Gridded Data with Xarray¶

Xarray is a powerful tool for exploring multi-dimensional (esp. geospatial) data in a way that is efficient and robust to making coding mistakes. Pandas provided us with a way to look at tabular data, Xarray takes this further and provides a framework for N-dimensional data. One of the coolest Xarray features, is the integration with Dask. This will allow us to easily parallelize our Xarray analysis!

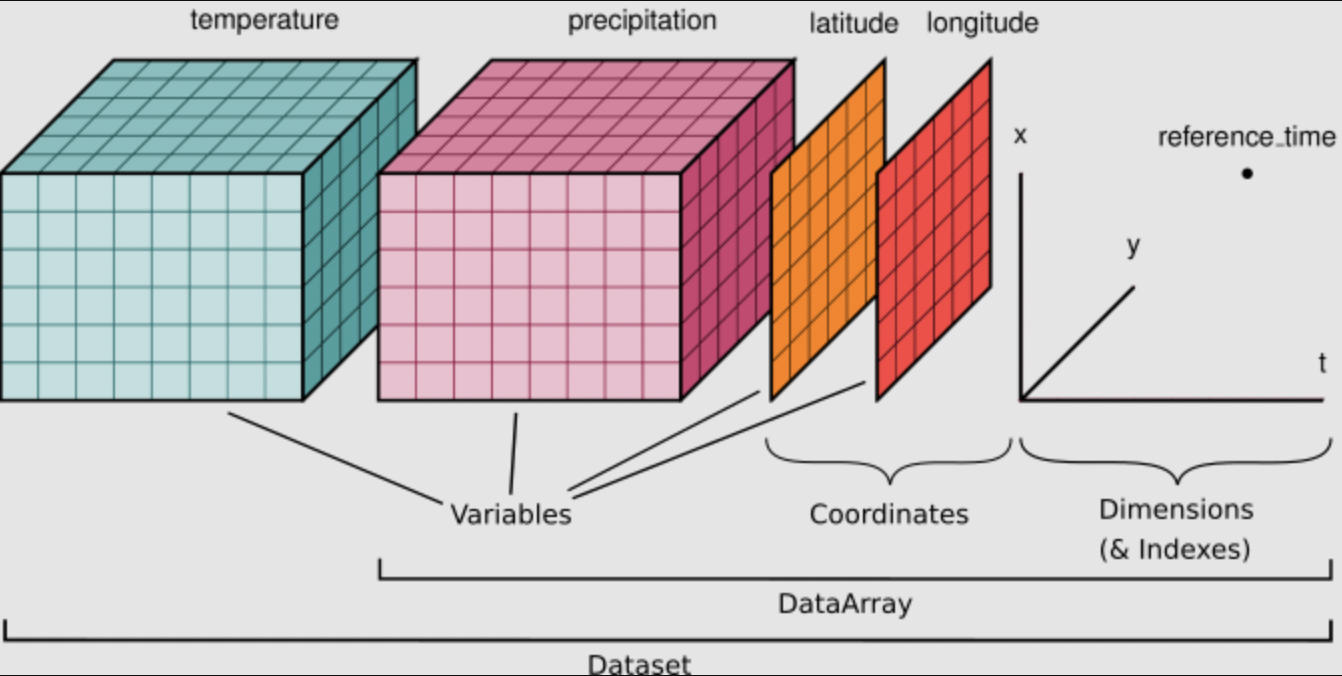

When using Xarray, our data will be stored in DataArrays which are collected together into a DataSet. A nice example of this is in the context of a climate model:

DataSet - contains all possible coordinates on the model grid and provides a list of all model variables (DataArrays)

DataArray - an individual model variable (e.g., sea surface temperature), the variable’s coordinates on the model grid, and any additional meta data about that specific variable

In the graphic below, you can see that temperature and precipitation are both variables with coordinates lat and lon. In this simple example temperature and precip each have 3 dimensions. Not only does the DataSet store all of this information, it also relates these two variables to each other by understanding that they share coordinates lat and lon. We will use some test data to inspect this further. Any NetCDF can be read into an Xarray dataset. Many popular models

facilitate reading raw output directly into Xarray.

[1]:

import matplotlib.pyplot as plt

import xarray as xr

# load a sample dataset from the xarray library

ds = xr.tutorial.load_dataset("air_temperature")

TIP If you realize you need to use Pandas for a particular problem, never fear! You can convert between Pandas and Xarray with a single line of code. Pandas has lots of built in tools that are great for manipulating dates and text, so you may want to use Pandas and Xarray together.

[2]:

df = ds.to_dataframe() # convert xarray dataset to pandas dataframe

df.to_xarray() # to xarray from dataframe

[2]:

<xarray.Dataset> Size: 31MB

Dimensions: (time: 2920, lat: 25, lon: 53)

Coordinates:

* time (time) datetime64[ns] 23kB 2013-01-01 ... 2014-12-31T18:00:00

* lat (lat) float32 100B 75.0 72.5 70.0 67.5 65.0 ... 22.5 20.0 17.5 15.0

* lon (lon) float32 212B 200.0 202.5 205.0 207.5 ... 325.0 327.5 330.0

Data variables:

air (time, lat, lon) float64 31MB 241.2 242.5 243.5 ... 296.2 295.7Inspecting our DataSet¶

[3]:

ds

[3]:

<xarray.Dataset> Size: 31MB

Dimensions: (time: 2920, lat: 25, lon: 53)

Coordinates:

* lat (lat) float32 100B 75.0 72.5 70.0 67.5 65.0 ... 22.5 20.0 17.5 15.0

* lon (lon) float32 212B 200.0 202.5 205.0 207.5 ... 325.0 327.5 330.0

* time (time) datetime64[ns] 23kB 2013-01-01 ... 2014-12-31T18:00:00

Data variables:

air (time, lat, lon) float64 31MB 241.2 242.5 243.5 ... 296.2 295.7

Attributes:

Conventions: COARDS

title: 4x daily NMC reanalysis (1948)

description: Data is from NMC initialized reanalysis\n(4x/day). These a...

platform: Model

references: http://www.esrl.noaa.gov/psd/data/gridded/data.ncep.reanaly...We can see that our variable air has 3 dimensions (lat, lon, time) defined by coordinates of the same name. We can inspect each of those coordinates to see their ranges.

[4]:

ds.lon

[4]:

<xarray.DataArray 'lon' (lon: 53)> Size: 212B

array([200. , 202.5, 205. , 207.5, 210. , 212.5, 215. , 217.5, 220. , 222.5,

225. , 227.5, 230. , 232.5, 235. , 237.5, 240. , 242.5, 245. , 247.5,

250. , 252.5, 255. , 257.5, 260. , 262.5, 265. , 267.5, 270. , 272.5,

275. , 277.5, 280. , 282.5, 285. , 287.5, 290. , 292.5, 295. , 297.5,

300. , 302.5, 305. , 307.5, 310. , 312.5, 315. , 317.5, 320. , 322.5,

325. , 327.5, 330. ], dtype=float32)

Coordinates:

* lon (lon) float32 212B 200.0 202.5 205.0 207.5 ... 325.0 327.5 330.0

Attributes:

standard_name: longitude

long_name: Longitude

units: degrees_east

axis: X[5]:

ds.time

[5]:

<xarray.DataArray 'time' (time: 2920)> Size: 23kB

array(['2013-01-01T00:00:00.000000000', '2013-01-01T06:00:00.000000000',

'2013-01-01T12:00:00.000000000', ..., '2014-12-31T06:00:00.000000000',

'2014-12-31T12:00:00.000000000', '2014-12-31T18:00:00.000000000'],

shape=(2920,), dtype='datetime64[ns]')

Coordinates:

* time (time) datetime64[ns] 23kB 2013-01-01 ... 2014-12-31T18:00:00

Attributes:

standard_name: time

long_name: TimeAttributes (attrs) provide additional meta-data in the form of strings.

[6]:

ds.attrs

[6]:

{'Conventions': 'COARDS',

'title': '4x daily NMC reanalysis (1948)',

'description': 'Data is from NMC initialized reanalysis\n(4x/day). These are the 0.9950 sigma level values.',

'platform': 'Model',

'references': 'http://www.esrl.noaa.gov/psd/data/gridded/data.ncep.reanalysis.html'}

[7]:

ds.lon.attrs

[7]:

{'standard_name': 'longitude',

'long_name': 'Longitude',

'units': 'degrees_east',

'axis': 'X'}

Selecting (Slicing) Sub-sets of Data with Labels¶

Xarray is great for doing reproducible science! Instead of having to remember or hard-code the order and size of your data dimensions, you can select subsets of data by their names and values. isel() is used to select by index, and sel() is used to select by dimension label and value.

[8]:

# Select the first day

ds.air.isel(time=0)

[8]:

<xarray.DataArray 'air' (lat: 25, lon: 53)> Size: 11kB

array([[241.2 , 242.5 , 243.5 , ..., 232.8 , 235.5 , 238.6 ],

[243.8 , 244.5 , 244.7 , ..., 232.8 , 235.3 , 239.3 ],

[250. , 249.8 , 248.89, ..., 233.2 , 236.39, 241.7 ],

...,

[296.6 , 296.2 , 296.4 , ..., 295.4 , 295.1 , 294.7 ],

[295.9 , 296.2 , 296.79, ..., 295.9 , 295.9 , 295.2 ],

[296.29, 296.79, 297.1 , ..., 296.9 , 296.79, 296.6 ]],

shape=(25, 53))

Coordinates:

* lat (lat) float32 100B 75.0 72.5 70.0 67.5 65.0 ... 22.5 20.0 17.5 15.0

* lon (lon) float32 212B 200.0 202.5 205.0 207.5 ... 325.0 327.5 330.0

time datetime64[ns] 8B 2013-01-01

Attributes:

long_name: 4xDaily Air temperature at sigma level 995

units: degK

precision: 2

GRIB_id: 11

GRIB_name: TMP

var_desc: Air temperature

dataset: NMC Reanalysis

level_desc: Surface

statistic: Individual Obs

parent_stat: Other

actual_range: [185.16 322.1 ][9]:

# Extract data at the longitude that is nearest 220.0E

ds.air.sel(lon=220.0,method='nearest')

[9]:

<xarray.DataArray 'air' (time: 2920, lat: 25)> Size: 584kB

array([[242.5 , 241.1 , 242.2 , ..., 292.79, 293.79, 295.5 ],

[244.3 , 242.2 , 242.1 , ..., 293. , 294.2 , 295.79],

[246.8 , 242.39, 243.7 , ..., 292.29, 293. , 295. ],

...,

[235.99, 241.89, 251.29, ..., 296.29, 297.69, 298.29],

[237.09, 239.89, 250.29, ..., 296.49, 297.89, 298.29],

[238.89, 238.59, 246.59, ..., 297.19, 297.69, 298.09]],

shape=(2920, 25))

Coordinates:

* lat (lat) float32 100B 75.0 72.5 70.0 67.5 65.0 ... 22.5 20.0 17.5 15.0

lon float32 4B 220.0

* time (time) datetime64[ns] 23kB 2013-01-01 ... 2014-12-31T18:00:00

Attributes:

long_name: 4xDaily Air temperature at sigma level 995

units: degK

precision: 2

GRIB_id: 11

GRIB_name: TMP

var_desc: Air temperature

dataset: NMC Reanalysis

level_desc: Surface

statistic: Individual Obs

parent_stat: Other

actual_range: [185.16 322.1 ][10]:

# Extract a sub-region of data by selecting slices of latitude and longitude

ds.air.sel(lat=slice(60, 20), lon=slice(200, 240)) # keep in mind that latitude is decreasing -- the slice is from 60 to 20

[10]:

<xarray.DataArray 'air' (time: 2920, lat: 17, lon: 17)> Size: 7MB

array([[[273.7 , 273.6 , 273.79, ..., 273. , 275.5 , 276. ],

[274.79, 275.2 , 275.6 , ..., 270.2 , 272.79, 274.9 ],

[275.9 , 276.9 , 276.9 , ..., 271.1 , 271.6 , 272.79],

...,

[295.4 , 295.7 , 295.79, ..., 290.2 , 290. , 289.9 ],

[297. , 296.7 , 296.1 , ..., 290.79, 290.9 , 290.7 ],

[296.6 , 296.2 , 296.4 , ..., 292. , 292.1 , 291.79]],

[[272.1 , 272.7 , 273.2 , ..., 270.2 , 272.79, 273.6 ],

[274. , 274.4 , 275.1 , ..., 267. , 270.29, 272.5 ],

[275.6 , 276.1 , 276.29, ..., 267.79, 269.2 , 270.6 ],

...,

[295.1 , 295.79, 295.79, ..., 290.2 , 290.29, 289.79],

[295.79, 295.79, 296.1 , ..., 290.6 , 290.79, 290.6 ],

[296.4 , 295.9 , 296.2 , ..., 292.1 , 292.2 , 292.29]],

[[275.5 , 275.9 , 275.29, ..., 267.4 , 271. , 272.1 ],

[274.29, 275. , 275.4 , ..., 265.1 , 269. , 271.6 ],

[274.5 , 275.6 , 276.1 , ..., 266.79, 267.79, 268.7 ],

...,

...

[291.39, 292.49, 294.39, ..., 290.89, 290.69, 290.29],

[292.99, 293.49, 295.99, ..., 292.19, 292.09, 292.29],

[294.79, 295.29, 297.49, ..., 294.39, 293.99, 294.49]],

[[274.29, 273.89, 274.29, ..., 273.19, 274.79, 273.49],

[275.59, 276.29, 277.49, ..., 268.79, 272.09, 272.89],

[276.89, 277.89, 278.69, ..., 263.69, 264.59, 266.59],

...,

[290.89, 291.49, 293.19, ..., 291.69, 291.39, 290.79],

[291.59, 291.69, 293.59, ..., 292.79, 292.59, 292.59],

[293.69, 293.89, 295.39, ..., 295.09, 294.59, 295.09]],

[[272.59, 271.99, 272.19, ..., 274.19, 275.39, 273.89],

[274.29, 274.49, 275.59, ..., 269.39, 272.89, 274.69],

[276.79, 277.49, 277.99, ..., 264.59, 266.89, 269.69],

...,

[291.49, 291.39, 292.39, ..., 291.59, 291.19, 290.99],

[292.89, 292.09, 292.99, ..., 293.49, 292.89, 292.89],

[293.79, 293.69, 295.09, ..., 295.39, 294.79, 294.79]]],

shape=(2920, 17, 17))

Coordinates:

* lat (lat) float32 68B 60.0 57.5 55.0 52.5 50.0 ... 27.5 25.0 22.5 20.0

* lon (lon) float32 68B 200.0 202.5 205.0 207.5 ... 235.0 237.5 240.0

* time (time) datetime64[ns] 23kB 2013-01-01 ... 2014-12-31T18:00:00

Attributes:

long_name: 4xDaily Air temperature at sigma level 995

units: degK

precision: 2

GRIB_id: 11

GRIB_name: TMP

var_desc: Air temperature

dataset: NMC Reanalysis

level_desc: Surface

statistic: Individual Obs

parent_stat: Other

actual_range: [185.16 322.1 ]Interpolating (or Resampling) Data¶

[11]:

# interpolate the air temperature to a new longitude and latitude

ds.air.interp(lon=220.7,lat=42.1)

[11]:

<xarray.DataArray 'air' (time: 2920)> Size: 23kB

array([285.786112, 285.416688, 284.80704 , ..., 286.6092 , 285.74424 ,

285.62024 ], shape=(2920,))

Coordinates:

* time (time) datetime64[ns] 23kB 2013-01-01 ... 2014-12-31T18:00:00

lon float64 8B 220.7

lat float64 8B 42.1

Attributes:

long_name: 4xDaily Air temperature at sigma level 995

units: degK

precision: 2

GRIB_id: 11

GRIB_name: TMP

var_desc: Air temperature

dataset: NMC Reanalysis

level_desc: Surface

statistic: Individual Obs

parent_stat: Other

actual_range: [185.16 322.1 ][12]:

import numpy as np

# interpolate to a particular set of longitudes and latitudes (increase the resolution)

new_lats = np.arange(ds.lat.min(), ds.lat.max(), 0.1) # our existing latitudes are every 0.5 deg, lets make that 0.1

new_lons = np.arange(ds.lon.min(), ds.lon.max(), 0.5) # our existing longitudes are every 2.5 deg, lets make that 0.5

ds.air.interp(lat=new_lats, lon=new_lons, method='linear') # let's do linear interpolation

[12]:

<xarray.DataArray 'air' (time: 2920, lat: 600, lon: 260)> Size: 4GB

array([[[296.29 , 296.39 , 296.49 , ..., 296.714 ,

296.676 , 296.638 ],

[296.27439994, 296.37279993, 296.47119993, ..., 296.67023983,

296.62815982, 296.5860798 ],

[296.25879988, 296.35559987, 296.45239986, ..., 296.62647967,

296.58031964, 296.5341596 ],

...,

[241.51176315, 241.75737408, 242.00298502, ..., 236.75918542,

237.40076903, 238.04235263],

[241.40776276, 241.65817371, 241.90858466, ..., 236.7527854 ,

237.38716898, 238.02155255],

[241.30376236, 241.55897333, 241.8141843 , ..., 236.74638538,

237.37356892, 238.00075247]],

[[296.29 , 296.472 , 296.654 , ..., 296.48 ,

296.52 , 296.56 ],

[296.28639999, 296.46511997, 296.64383996, ..., 296.43439983,

296.46959981, 296.50479979],

[296.28279997, 296.45823995, 296.63367992, ..., 296.38879965,

296.41919962, 296.44959958],

...

[246.28162651, 246.09121922, 245.90081194, ..., 244.1781002 ,

244.36208199, 244.54606377],

[246.11762589, 245.92401859, 245.73041129, ..., 244.22210037,

244.39808212, 244.57406387],

[245.95362526, 245.75681795, 245.56001064, ..., 244.26610054,

244.43408226, 244.60206398]],

[[297.69 , 297.77 , 297.85 , ..., 295.99 ,

295.89 , 295.79 ],

[297.62599976, 297.70919977, 297.79239978, ..., 295.96999992,

295.86999992, 295.76999992],

[297.56199951, 297.64839954, 297.73479956, ..., 295.94999985,

295.84999985, 295.74999985],

...,

[245.66556274, 245.5103591 , 245.35515546, ..., 241.51886923,

241.60524919, 241.69162915],

[245.47356201, 245.31675836, 245.15995471, ..., 241.54926935,

241.62684927, 241.7044292 ],

[245.28156128, 245.12315762, 244.96475397, ..., 241.57966946,

241.64844936, 241.71722925]]], shape=(2920, 600, 260))

Coordinates:

* time (time) datetime64[ns] 23kB 2013-01-01 ... 2014-12-31T18:00:00

* lat (lat) float64 5kB 15.0 15.1 15.2 15.3 15.4 ... 74.6 74.7 74.8 74.9

* lon (lon) float64 2kB 200.0 200.5 201.0 201.5 ... 328.5 329.0 329.5

Attributes:

long_name: 4xDaily Air temperature at sigma level 995

units: degK

precision: 2

GRIB_id: 11

GRIB_name: TMP

var_desc: Air temperature

dataset: NMC Reanalysis

level_desc: Surface

statistic: Individual Obs

parent_stat: Other

actual_range: [185.16 322.1 ]TIP xarray also has a function called interp_like which allows you to interpolate one DataArray to the coordinates of another DataArray.

Plotting in Xarray (so easy!)¶

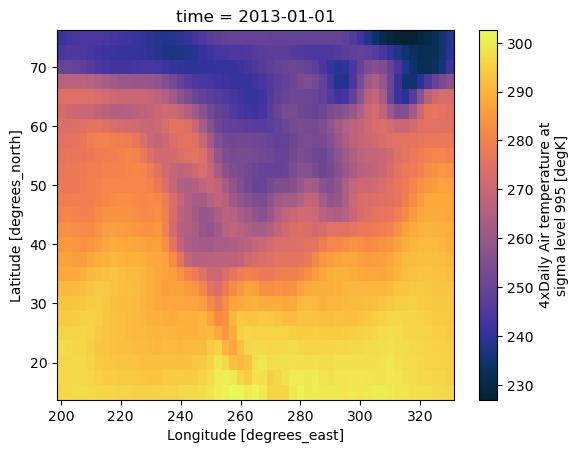

[13]:

ds.air.isel(time=0).plot()

[13]:

<matplotlib.collections.QuadMesh at 0x30efb7380>

[14]:

import cmocean.cm as cmo

# Let's use a non-default colormap

ds.air.isel(time=0).plot(cmap=cmo.thermal)

[14]:

<matplotlib.collections.QuadMesh at 0x30fe09bd0>

[15]:

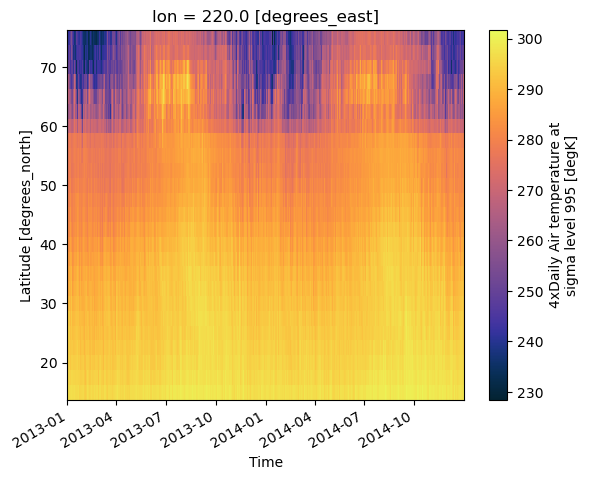

ds.air.sel(lon=220.0,method='nearest').plot(y='lat',x='time',cmap=cmo.thermal)

[15]:

<matplotlib.collections.QuadMesh at 0x30ff10a50>

Statistics in Xarray¶

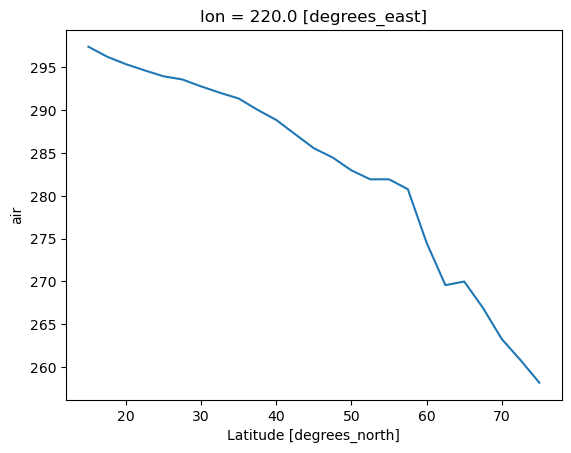

[16]:

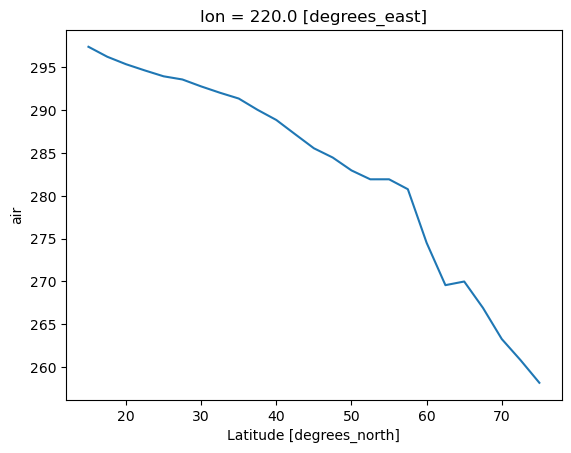

ds.air.sel(lon=220.0,method='nearest').mean(dim='time').plot()

[16]:

[<matplotlib.lines.Line2D at 0x320016490>]

[17]:

del ds

EOF decomposition (a.k.a. PCA) with xeofs¶

Here we will show a brief tutorial on a package called ``xeofs` <https://xeofs.readthedocs.io/en/latest/content/user_guide/quickstart.html>`__ which computes Empirical Orthogonal Functions (EOFs) over Xarray DataArrays. EOF analysis is also sometimes called modal decomposition or PCA. We do not expect everyone to know the underlying mathematical principles. Perhaps you never need this, perhaps you use it all of the time already, perhaps you will learn it in the future. Because it is such a common analysis tool in climate and geosciences, we will spend about 10 minutes on it here.

If you are not interested in using EOFs, this can serve as an example of one of the many software packages for data analysis with Xarray.

[1]:

import xarray as xr

import xeofs as xe

# we will use the same air temperature data set

t2m = xr.tutorial.open_dataset("air_temperature")

# choose a model to use. this will dictate what kind of EOF analysis we will perform.

# we want to weight our data by the cosine of latitude, so we will use the EOF model with coslat weighting.

model = xe.single.EOF(use_coslat=True)

# fit the model to the data (i.e., compute the EOFs), by default this will give us 2 EOFs

model.fit(t2m, dim="time")

model

[1]:

<xeofs.single.eof.EOF at 0x167ee16a0>

[2]:

# we can look at how much variance each EOF explains

model.explained_variance_ratio()

[2]:

<xarray.DataArray 'explained_variance_ratio' (mode: 2)> Size: 16B

array([0.78049922, 0.03021367])

Coordinates:

* mode (mode) int64 16B 1 2

Attributes: (12/15)

model: EOF analysis

software: xeofs

version: 3.0.4

date: 2025-08-20 21:04:56

n_modes: 2

center: True

... ...

sample_name: sample

feature_name: feature

random_state: None

compute: True

solver: auto

solver_kwargs: {}This shows us that the first EOF explains 78% of the variance while the second one only explains 3%…What if we ask for more modes?

[3]:

model = xe.single.EOF(use_coslat=True,n_modes=4)

model.fit(t2m, dim="time") # fit the model to the data and return 4 EOFs

model.explained_variance_ratio()

[3]:

<xarray.DataArray 'explained_variance_ratio' (mode: 4)> Size: 32B

array([0.78049922, 0.03021367, 0.02367857, 0.02009094])

Coordinates:

* mode (mode) int64 32B 1 2 3 4

Attributes: (12/15)

model: EOF analysis

software: xeofs

version: 3.0.4

date: 2025-08-20 21:04:58

n_modes: 4

center: True

... ...

sample_name: sample

feature_name: feature

random_state: None

compute: True

solver: auto

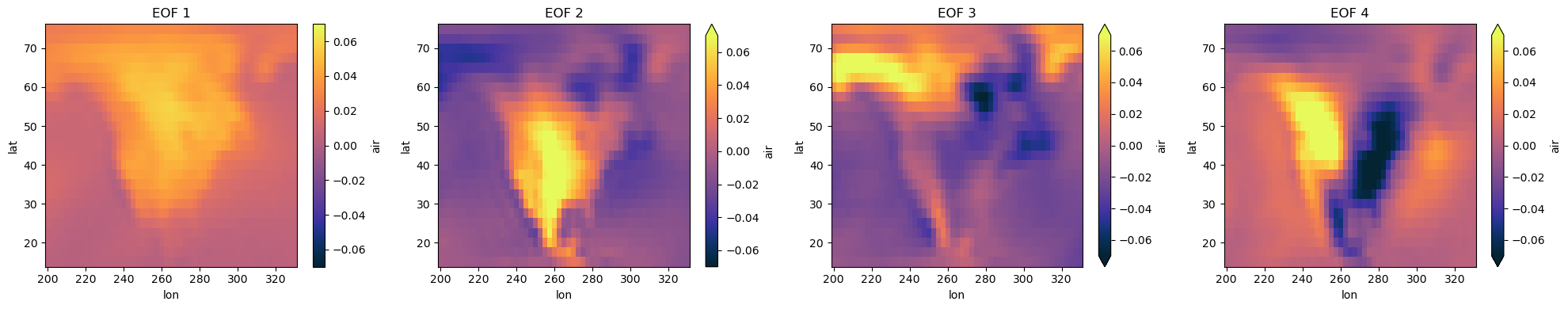

solver_kwargs: {}As we can see the significance of the modes is just decreasing. Now lets examine the spatial patterns in the EOFs themselves. We do this by grabbing the components.

[4]:

components = model.components() # we should see our 4 EOFs (we have 4 principal components)

components

[4]:

<xarray.Dataset> Size: 43kB

Dimensions: (lat: 25, lon: 53, mode: 4)

Coordinates:

* lat (lat) float32 100B 15.0 17.5 20.0 22.5 25.0 ... 67.5 70.0 72.5 75.0

* lon (lon) float32 212B 200.0 202.5 205.0 207.5 ... 325.0 327.5 330.0

* mode (mode) int64 32B 1 2 3 4

Data variables:

air (mode, lat, lon) float64 42kB 0.003083 0.002989 ... 0.0004281[5]:

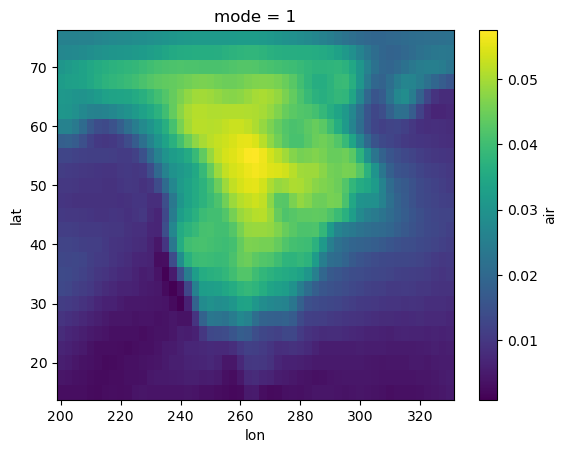

# Lets plot the first EOF

components["air"].sel(mode=1).plot()

[5]:

<matplotlib.collections.QuadMesh at 0x31bdcd550>

[6]:

import matplotlib.pyplot as plt

import cmocean.cm as cmo

# Maybe we want to look at all EOFs

fig, ax = plt.subplots(nrows=1, ncols=4, figsize=(20, 4))

for i in range(4):

components["air"].sel(mode=i+1).plot(ax=ax[i], cmap=cmo.thermal, vmin=-0.07, vmax=0.07)

ax[i].set_title(f"EOF {i+1}")

plt.tight_layout()

Keep in mind that is still up to you, the scientist, to decide do these results look reasonable? Did you take the EOF in the appropriate dimension? What are meaningful units for your colorbars? Explore the tutorials on the xeofs docs to see examples for significance testing, multivariate EOF, Hilbert EOF, sparse EOF, and much much more.

Advanced Operations with Gridded Data (xgcm)¶

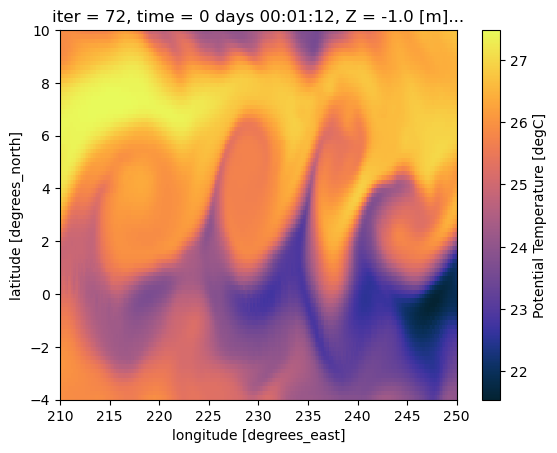

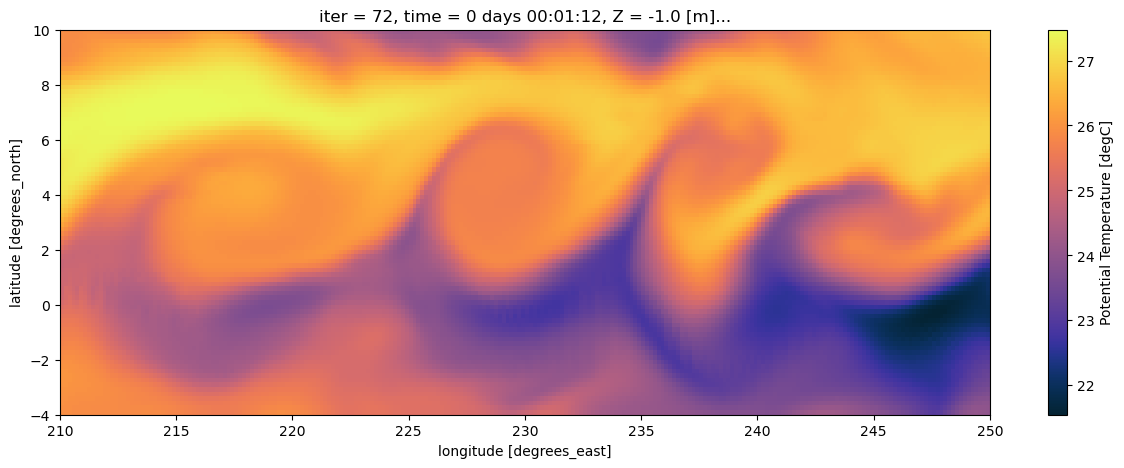

Let’s look at a more interesting and realistic example. We can look at some model output from the Tropical Pacific Ocean. This model output includes T, S, U, and V from the ocean surface in the central Pacific during the negative phase of ENSO known as La Nina.

[9]:

import xarray as xr

ds = xr.open_dataset('TPOSE6_Daily_2012_surface.nc',decode_timedelta=True)

[10]:

ds

[10]:

<xarray.Dataset> Size: 120MB

Dimensions: (time: 366, Z: 1, YC: 84, XG: 241, YG: 85, XC: 240)

Coordinates: (12/26)

iter (time) int64 3kB ...

* time (time) timedelta64[ns] 3kB 00:01:12 00:02:24 ... 01:10:48 01:12:00

* YC (YC) float64 672B -3.917 -3.75 -3.583 -3.417 ... 9.583 9.75 9.917

* XG (XG) float64 2kB 210.0 210.2 210.3 210.5 ... 249.7 249.8 250.0

* Z (Z) float64 8B -1.0

dyG (YC, XG) float32 81kB ...

... ...

rA (YC, XC) float32 81kB ...

Depth (YC, XC) float32 81kB ...

hFacC (Z, YC, XC) float32 81kB ...

maskC (Z, YC, XC) bool 20kB ...

dxF (YC, XC) float32 81kB ...

dyF (YC, XC) float32 81kB ...

Data variables:

UVEL (time, Z, YC, XG) float32 30MB ...

VVEL (time, Z, YG, XC) float32 30MB ...

THETA (time, Z, YC, XC) float32 30MB ...

SALT (time, Z, YC, XC) float32 30MB ...During La Nina the Tropical Pacific sees a large scale instability known as a Tropical Instability Wave. These are clear in SST. Let’s plot it to see what the TIWs would look like from space.

[11]:

import cmocean.cm as cmo

ds.THETA.isel(time=0).plot(cmap=cmo.thermal)

[11]:

<matplotlib.collections.QuadMesh at 0x31c5eb4d0>

[14]:

fig, ax = plt.subplots(figsize=(15, 5))

ds.THETA.isel(time=0).plot(ax=ax, cmap=cmo.thermal)

[14]:

<matplotlib.collections.QuadMesh at 0x31dcac190>

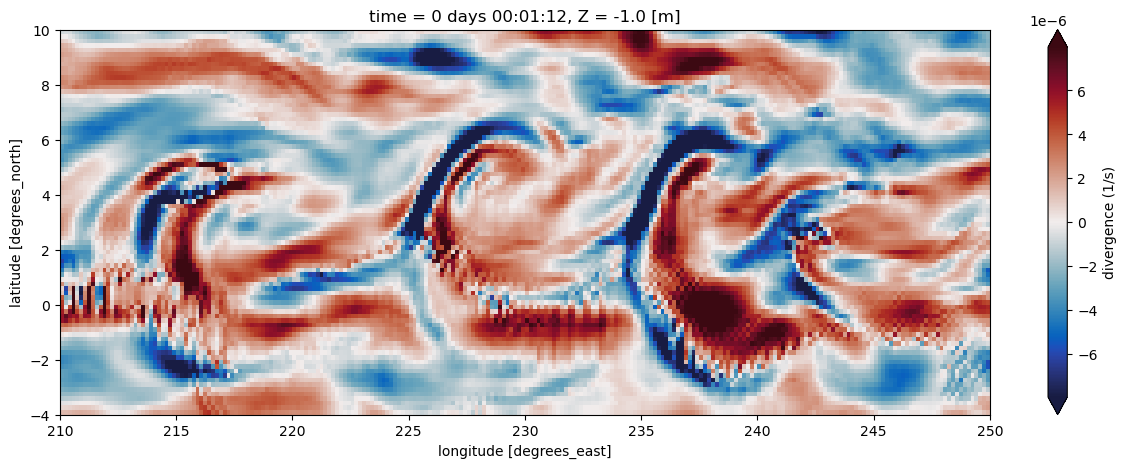

That’s cool too see! Now what if we need to do something harder to understand what’s going on? Maybe we want to learn more about the dynamics by computing vorticity or divergence? Now we need gradients, or sometimes integrals, of these variables … Enter xgcm.

Calculate Divergence with xgcm¶

xgcm is a Python package that is built around Xarray. With it you create a Grid object which can then be used to perform more complicated mathematical operations, while remaining aware of complex grid geometries. The Grid is aware of the different coordinates and their relationships to each other in space. This can be really useful for model output or observations where not all variables are on the same points in space.

[17]:

import xgcm

# create the grid object from our dataset

grid = xgcm.Grid(ds, periodic=['X','Y'])

grid

[17]:

<xgcm.Grid>

Y Axis (periodic, boundary=None):

* center YC --> outer

* outer YG --> center

Z Axis (not periodic, boundary=None):

* center Z

T Axis (not periodic, boundary=None):

* center time

X Axis (periodic, boundary=None):

* center XC --> outer

* outer XG --> center

Perhaps we want to do something like calculate divergence. As a reference equation for divergence is:

In a discretized grid you would calculate it this way:

But this assumes that the output of our model, or our data fields, are all at the same points in space. That is often not the case. Let’s inspect our fields.

[18]:

# inspect some of our variables

ds.UVEL # zonal velocity

[18]:

<xarray.DataArray 'UVEL' (time: 366, Z: 1, YC: 84, XG: 241)> Size: 30MB

[7409304 values with dtype=float32]

Coordinates: (12/13)

iter (time) int64 3kB ...

* time (time) timedelta64[ns] 3kB 00:01:12 00:02:24 ... 01:10:48 01:12:00

* YC (YC) float64 672B -3.917 -3.75 -3.583 -3.417 ... 9.583 9.75 9.917

* XG (XG) float64 2kB 210.0 210.2 210.3 210.5 ... 249.7 249.8 250.0

* Z (Z) float64 8B -1.0

dyG (YC, XG) float32 81kB ...

... ...

rAw (YC, XG) float32 81kB ...

drF (Z) float32 4B ...

PHrefC (Z) float32 4B ...

hFacW (Z, YC, XG) float32 81kB ...

maskW (Z, YC, XG) bool 20kB ...

rhoRef (Z) float32 4B ...

Attributes:

standard_name: UVEL

long_name: Zonal Component of Velocity (m/s)

units: m/s

mate: VVEL[19]:

ds.VVEL # meridional velocity

[19]:

<xarray.DataArray 'VVEL' (time: 366, Z: 1, YG: 85, XC: 240)> Size: 30MB

[7466400 values with dtype=float32]

Coordinates: (12/13)

iter (time) int64 3kB ...

* time (time) timedelta64[ns] 3kB 00:01:12 00:02:24 ... 01:10:48 01:12:00

* Z (Z) float64 8B -1.0

drF (Z) float32 4B ...

PHrefC (Z) float32 4B ...

rhoRef (Z) float32 4B ...

... ...

* YG (YG) float64 680B -4.0 -3.833 -3.667 -3.5 ... 9.5 9.667 9.833 10.0

dxG (YG, XC) float32 82kB ...

dyC (YG, XC) float32 82kB ...

rAs (YG, XC) float32 82kB ...

hFacS (Z, YG, XC) float32 82kB ...

maskS (Z, YG, XC) bool 20kB ...

Attributes:

standard_name: VVEL

long_name: Meridional Component of Velocity (m/s)

units: m/s

mate: UVELWe can see that zonal and meridional velocity are computed on different grid points (XG, YC) v (XC, YG). This is not uncommon, but it means we can’t just add/subtract/multiply the velocities because they are not located at the same points in space. How would we handle this without xgcm?

we would have to realize that these variables aren’t on the exact same points in space, even though the arrays are the same size

then we would have to figure out how far apart their respective points are, and use that to interpolate them to a common point

we would have to implement a way to handle the boundaries (interpolate, leave them empty, … )

we would have to do this for both UVEL and VVEL

If we did that and then were to switch to a model or data format where the names of coordinates are slightly different, we will have to rewrite all of our code. Instead we can use our Grid object. If, in the future, the naming/structure of the grid changes then we will update the grid to reflect the correct relationships but we won’t have to change the rest of the algorithm.

We can use the Grid to interpolate the variables to a common point:

[20]:

# interpolate U along the X axis: this will interpolate XG to the other X cordinate XC

U = grid.interp(ds.UVEL,'X')

# interpolate V along the Y axis: this will interpolate YG to the other Y cordinate YC

V = grid.interp(ds.VVEL,'Y')

display(U.dims)

display(V.dims)

/Users/ellend/miniconda3/envs/sio_software/lib/python3.13/site-packages/xgcm/grid_ufunc.py:832: FutureWarning: The return type of `Dataset.dims` will be changed to return a set of dimension names in future, in order to be more consistent with `DataArray.dims`. To access a mapping from dimension names to lengths, please use `Dataset.sizes`.

out_dim: grid._ds.dims[out_dim] for arg in out_core_dims for out_dim in arg

('time', 'Z', 'YC', 'XC')

('time', 'Z', 'YC', 'XC')

Even better, however, the Grid object will automatically handle the interpolation for us when we go to combine the variables in the equation for divergence. The interp step above is useful for demonstration, but actually isn’t necessary at all. This further reduces the complexity of the code and the opportunities for making mistakes.

[21]:

# compute divergence

u_transport = ds.UVEL * ds.dyG * ds.hFacW * ds.drF

v_transport = ds.VVEL * ds.dxG * ds.hFacS * ds.drF

div_uv = (grid.diff(u_transport, 'X') + grid.diff(v_transport, 'Y')) / ds.rA

div_uv.dims

/Users/ellend/miniconda3/envs/sio_software/lib/python3.13/site-packages/xgcm/grid_ufunc.py:832: FutureWarning: The return type of `Dataset.dims` will be changed to return a set of dimension names in future, in order to be more consistent with `DataArray.dims`. To access a mapping from dimension names to lengths, please use `Dataset.sizes`.

out_dim: grid._ds.dims[out_dim] for arg in out_core_dims for out_dim in arg

[21]:

('time', 'Z', 'YC', 'XC')

[22]:

import cmocean.cm as cmo

fig, ax = plt.subplots(figsize=(15,5))

div_uv.isel(time=0).plot(ax=ax,cmap=cmo.balance, robust=True, cbar_kwargs={'label': 'divergence (1/s)'})

[22]:

<matplotlib.collections.QuadMesh at 0x31ec5dd10>

Cool! In this plot we can see there is divergence/convergence along opposite sides of those temperature fronts!

The code above shows that we can take the divergence of the flow, while accounting for the grid geometry, in only 3 lines of code! This framework is robust to making mistakes due to coding errors or misunderstanding of the grid itself.

Xarray will not blindly combine variables of the same size when it knows they are not on the same points in space. That being said, nothing is fool-proof. When we use Xarray and xgcm we cannot prevent mistakes entirely. Instead we aim to spend more time on errors such as “our question is not formulated properly” rather than “the third dimension of my data isn’t the size I expected it to be, which of my indices was wrong?”.

NOTE Take a look at all of the cool Xarray related projects! Most of them are for geoscience applications. There is also an extensive set of Xarray tutorials.